Hello, GSoC is at its end and it is time for me to summarize my work over the past 12 weeks. In case you only want to see the code, here are the pull requests: Mission Supernova, Text to speech, Encoding conversion.

Projects

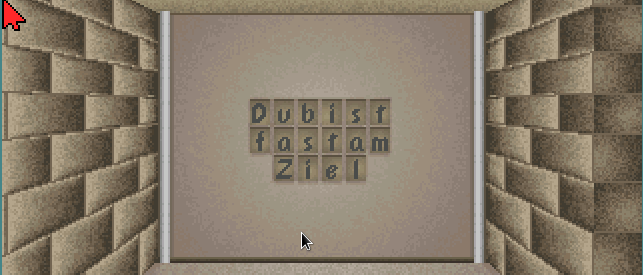

Mission Supernova

As my first project, I worked on an engine for Mission Supernova 2. The project description can be found here.

Text to speech

Because I finished the first project early, I chose to work on a text to speech project next. The task was to implement text to speech support in ScummVM for at least two platforms, and then use this feature either in the GUI, for people with reduced sight, or in the Mortevielle engine. The whole project description can be found here.

Encoding conversion

Because there was still a little bit of GSoC left, I started to look for another project to work on. While working on the text to speech project, I needed to convert between different character encodings (to UTF-8 on Linux, to UTF-16 on Windows). I implemented some conversion there, but it wasn’t perfect. Another pull request, which improved cloud support in ScummVM, also ran into an issue with encodings, and there was a short discussion about encoding conversion on IRC. So I decided to add a way to convert between encodings to ScummVM.
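To give an idea of what such a conversion involves, here is a minimal sketch of converting ISO-8859-1 (Latin-1) text to UTF-8 and to UTF-16. It is only an illustration: the function names `latin1ToUtf8` and `latin1ToUtf16` are made up for this post and are not the ScummVM API, and the choice of Latin-1 as the source encoding is an assumption. Real conversion code has to handle many more encodings.

```cpp
#include <string>

// Hypothetical helper (not part of ScummVM): converts ISO-8859-1 bytes to UTF-8.
// Every Latin-1 byte maps directly to the Unicode code point of the same value.
std::string latin1ToUtf8(const std::string &input) {
    std::string output;
    output.reserve(input.size() * 2); // worst case: two bytes per character
    for (unsigned char c : input) {
        if (c < 0x80) {
            // ASCII range is encoded as a single byte
            output.push_back(static_cast<char>(c));
        } else {
            // Code points 0x80..0xFF need two bytes: 110xxxxx 10xxxxxx
            output.push_back(static_cast<char>(0xC0 | (c >> 6)));
            output.push_back(static_cast<char>(0x80 | (c & 0x3F)));
        }
    }
    return output;
}

// Hypothetical helper (not part of ScummVM): converts ISO-8859-1 bytes to UTF-16.
// All Latin-1 code points fit into a single 16-bit code unit.
std::u16string latin1ToUtf16(const std::string &input) {
    std::u16string output;
    output.reserve(input.size());
    for (unsigned char c : input) {
        output.push_back(static_cast<char16_t>(c));
    }
    return output;
}
```

With this sketch, the Latin-1 byte `0xE9` (é) becomes the two bytes `0xC3 0xA9` in UTF-8 and the single code unit `0x00E9` in UTF-16.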

What did GSoC give me?

During this GSoC, I had the opportunity to experiment with quite a lot of things that were new to me: text to speech synthesis, encoding conversions, creating MIDI sounds, multi-threading, and reading through old C code (Kernighan and Ritchie function declarations, a lot of gotos and jumps, quite a bit of assembly). I also got better at Vim, learned more about Git, and a lot more. I had to familiarize myself with quite a bit of the ScummVM code base, too. I got used to programming 8 hours per day, which, along with the programming experience I gained, will surely help me with my school projects next semester. And the most important thing: I memorized like 30 hours of metal songs 😀

What’s next?

I would like to enjoy the rest of the holidays before school begins again, so I won’t work as much, but I certainly want to fix anything that is not working in my code. After that I will probably have a lot of work with my school projects, but I certainly want to find at least a little bit of time throughout the school year to work on another project for ScummVM, maybe another engine, but I don’t know yet.

This is probably my last blog post for some time, because I am not someone who writes a blog post unless it is really interesting or important. So goodbye, readers.