In the past week I’ve been working on the implementation of the 2D blitting API for TinyGL. Since I’ve already explained and shown the design of the API, I want to discuss its implementation in this blog post.

At its core, the API is implemented through a function template called tglBlitGeneric:

```cpp
template <bool disableBlending, bool disableColoring, bool disableTransform, bool flipVertical, bool flipHorizontal>
FORCEINLINE void tglBlitGeneric(BlitImage *blitImage, const BlitTransform &transform) {
    if (disableTransform) {
        // No rotation or scaling: pick between the two untransformed paths.
        if (disableBlending && !flipVertical && !flipHorizontal) {
            // Opaque, unflipped sprite: draw only the precomputed visible lines.
            tglBlitRLE<disableColoring>(/* params */);
        } else {
            // Blending and/or flipping, still 1:1 with the source image.
            tglBlitSimple<disableBlending, disableColoring, flipVertical, flipHorizontal>(/* params */);
        }
    } else {
        // The rotation angle is runtime data, so this is the only runtime check.
        if (transform._rotation == 0) {
            tglBlitScale<disableBlending, disableColoring, flipVertical, flipHorizontal>(/* params */);
        } else {
            tglBlitRotoScale<disableBlending, disableColoring, flipVertical, flipHorizontal>(/* params */);
        }
    }
}
```

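This function picks the most suitable implementation based on its template parameters. Since those parameters are known at compile time, the checks on them are resolved by the compiler and the whole function boils down to a simple function call (only the rotation check, which depends on runtime data, remains). The current implementation supports four paths, each optimized for a specific case: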

- tglBlitRLE

- tglBlitSimple

- tglBlitScale

- tglBlitRotoScale
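The compile-time dispatch can be illustrated with a small, self-contained sketch. The names below (Path, Transform, chooseBlitPath) are hypothetical and only mirror the branching of tglBlitGeneric: instead of performing a blit, the function reports which path would run. disableColoring is left out because it does not affect which path is chosen. The sketch uses C++17 if constexpr to make the compile-time selection explicit, whereas the original uses plain if statements, which the optimizer can fold just as well because their conditions are constants.

```cpp
#include <cassert>

// Hypothetical labels for the four blitting paths.
enum class Path { RLE, Simple, Scale, RotoScale };

// Hypothetical transform description; only the rotation matters for dispatch.
struct Transform {
    int rotation = 0;
};

// Mirrors the branching of tglBlitGeneric. The boolean template parameters are
// compile-time constants, so `if constexpr` discards the untaken branches.
template <bool disableBlending, bool disableTransform, bool flipVertical, bool flipHorizontal>
Path chooseBlitPath([[maybe_unused]] const Transform &transform) {
    if constexpr (disableTransform) {
        if constexpr (disableBlending && !flipVertical && !flipHorizontal) {
            return Path::RLE;     // opaque, untransformed, unflipped
        } else {
            return Path::Simple;  // blending and/or flipping, no transform
        }
    } else {
        // The angle is only known at runtime, so this test survives compilation.
        return transform.rotation == 0 ? Path::Scale : Path::RotoScale;
    }
}
```

Each combination of flags instantiates a separate function, so the cost of the flexibility is paid in code size rather than in per-call branching.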

tglBlitRLE optimizes rendering by skipping the transparent parts of the bitmap: the lines of visible pixels are computed in advance, when the image is uploaded into TinyGL through tglUploadBlitImage, so only those lines are drawn. It is selected when blending, sprite transforms and flipping are all disabled.
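A minimal sketch of the idea behind tglBlitRLE, under my own assumptions about the data layout: the hypothetical buildOpaqueRuns function plays the role of the precomputation done at upload time, recording every horizontal run of non-transparent pixels (alpha different from zero) in a 0xAARRGGBB image, and blitRuns copies only those runs. Clipping is left out, and TinyGL’s actual structures may differ.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

using std::uint32_t;

// Hypothetical run of consecutive visible pixels on one row of the image.
struct OpaqueRun {
    int x, y;                      // start position inside the source image
    std::vector<uint32_t> pixels;  // 0xAARRGGBB values of the run
};

// Done once, at "upload" time: scan each row and store the runs of pixels
// whose alpha is non-zero. Fully transparent pixels are never stored.
std::vector<OpaqueRun> buildOpaqueRuns(const std::vector<uint32_t> &argb, int width, int height) {
    assert(static_cast<int>(argb.size()) == width * height);
    std::vector<OpaqueRun> runs;
    for (int y = 0; y < height; ++y) {
        int x = 0;
        while (x < width) {
            // Skip transparent pixels (alpha == 0).
            while (x < width && (argb[y * width + x] >> 24) == 0)
                ++x;
            if (x == width)
                break;
            OpaqueRun run{x, y, {}};
            while (x < width && (argb[y * width + x] >> 24) != 0)
                run.pixels.push_back(argb[y * width + x++]);
            runs.push_back(run);
        }
    }
    return runs;
}

// Done every frame: copy the runs without blending. The destination is
// assumed to be large enough to hold the image at (dstX, dstY).
void blitRuns(const std::vector<OpaqueRun> &runs, std::vector<uint32_t> &dst,
              int dstWidth, int dstX, int dstY) {
    for (const OpaqueRun &run : runs) {
        uint32_t *out = &dst[(dstY + run.y) * dstWidth + dstX + run.x];
        for (uint32_t p : run.pixels)
            *out++ = p;
    }
}
```

Because the scan happens once per image instead of once per frame, each blit only touches the stored runs, which pays off for sprites with large transparent areas.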

tglBlitSimple covers the basic case where the sprite needs pixel blending but is not transformed in any way (i.e. neither rotated nor scaled); it can still be tinted and flipped vertically, horizontally or both. It is also used for unblended sprites that need flipping, since tglBlitRLE doesn’t handle flips.
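The sketch below shows what the per-pixel work of an untransformed blit could look like. The helpers (channel, tint, blend), the blitSimple name and the 0xAARRGGBB pixel format are assumptions for illustration: tinting multiplies each channel by the tint colour, and blending is standard source-over compositing onto an opaque destination. As in tglBlitGeneric, the flags are template parameters, so the disabled features cost nothing at runtime.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

using std::uint32_t;

// Extract one 8-bit channel from a 0xAARRGGBB pixel.
inline uint32_t channel(uint32_t c, int shift) { return (c >> shift) & 0xFF; }

// Tint: multiply every channel (alpha included) by the tint colour, 0..255 scale.
inline uint32_t tint(uint32_t src, uint32_t color) {
    uint32_t out = 0;
    for (int shift = 0; shift < 32; shift += 8)
        out |= (channel(src, shift) * channel(color, shift) / 255) << shift;
    return out;
}

// Source-over blending of src onto an opaque destination pixel.
inline uint32_t blend(uint32_t src, uint32_t dst) {
    const uint32_t a = channel(src, 24);
    uint32_t out = 0xFF000000u;
    for (int shift = 0; shift < 24; shift += 8)
        out |= ((channel(src, shift) * a + channel(dst, shift) * (255 - a)) / 255) << shift;
    return out;
}

// Hypothetical untransformed blit: each destination pixel maps to exactly one
// source pixel, and flipping only changes which source row/column is read.
template <bool disableBlending, bool disableColoring, bool flipVertical, bool flipHorizontal>
void blitSimple(const std::vector<uint32_t> &src, int w, int h,
                std::vector<uint32_t> &dst, int dstWidth, int dstX, int dstY, uint32_t color) {
    for (int y = 0; y < h; ++y) {
        const int srcY = flipVertical ? h - 1 - y : y;
        for (int x = 0; x < w; ++x) {
            const int srcX = flipHorizontal ? w - 1 - x : x;
            uint32_t p = src[srcY * w + srcX];
            if (!disableColoring)
                p = tint(p, color);
            uint32_t &out = dst[(dstY + y) * dstWidth + dstX + x];
            out = disableBlending ? p : blend(p, out);
        }
    }
}
```

For example, blending a half-transparent red pixel (0x80FF0000) onto black gives 0xFF800000, since 255 * 128 / 255 = 128.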

tglBlitScale is used when the sprite is scaled but not rotated (plus any combination of blending, tinting and flipping).
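For the scaled path, a common approach is inverse mapping: iterate over the destination rectangle and compute which source pixel covers each destination pixel, which avoids holes when a sprite is enlarged. The sketch below uses nearest-neighbour sampling with integer arithmetic. Both the sampling method and the hypothetical blitScaled function are assumptions, since the post doesn’t say how tglBlitScale filters, and blending, tinting and flipping are omitted for brevity.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

using std::uint32_t;

// Hypothetical nearest-neighbour scaler: the w x h source is stretched to a
// dstW x dstH rectangle placed at (dstX, dstY) in the destination buffer.
void blitScaled(const std::vector<uint32_t> &src, int w, int h,
                std::vector<uint32_t> &dst, int dstWidth, int dstX, int dstY,
                int dstW, int dstH) {
    assert(dstW > 0 && dstH > 0);
    for (int y = 0; y < dstH; ++y) {
        const int srcY = y * h / dstH;      // inverse mapping, always < h
        for (int x = 0; x < dstW; ++x) {
            const int srcX = x * w / dstW;  // always < w
            dst[(dstY + y) * dstWidth + dstX + x] = src[srcY * w + srcX];
        }
    }
}
```

The same loop handles both enlarging and shrinking: upscaling a 2x2 image to 4x4 repeats every source pixel in a 2x2 block, while downscaling a 4x1 row to 2x1 keeps every other pixel.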

tglBlitRotoScale is used whenever the sprite is rotated: it handles rotation and scaling together, plus blending, tinting and flipping.
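Rotation can be handled with the same inverse-mapping idea: each destination pixel is rotated back by the sprite’s angle, divided by the scale factors and then looked up in the source image. The hypothetical blitRotoScaled below rotates around the sprite’s centre and uses floating point with nearest-neighbour sampling for readability; the real tglBlitRotoScale may use a different pivot, fixed-point arithmetic or another filter.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

using std::uint32_t;

// Hypothetical rotate+scale blit. The w x h sprite is scaled by (scaleX, scaleY),
// rotated by `degrees` around its centre and centred at (centerX, centerY).
void blitRotoScaled(const std::vector<uint32_t> &src, int w, int h,
                    std::vector<uint32_t> &dst, int dstWidth, int dstHeight,
                    double centerX, double centerY,
                    double degrees, double scaleX, double scaleY) {
    assert(scaleX > 0 && scaleY > 0);
    const double pi = 3.14159265358979323846;
    const double rad = degrees * pi / 180.0;
    const double c = std::cos(rad), s = std::sin(rad);
    // Half the diagonal of the scaled sprite bounds it at any angle.
    const double radius = 0.5 * std::hypot(w * scaleX, h * scaleY);
    const int minX = std::max(0, static_cast<int>(std::floor(centerX - radius)));
    const int maxX = std::min(dstWidth - 1, static_cast<int>(std::ceil(centerX + radius)));
    const int minY = std::max(0, static_cast<int>(std::floor(centerY - radius)));
    const int maxY = std::min(dstHeight - 1, static_cast<int>(std::ceil(centerY + radius)));
    for (int y = minY; y <= maxY; ++y) {
        for (int x = minX; x <= maxX; ++x) {
            // Offset of this pixel's centre from the sprite centre.
            const double dx = x + 0.5 - centerX;
            const double dy = y + 0.5 - centerY;
            // Undo the rotation (rotate by -angle), then undo the scale.
            const double u = (c * dx + s * dy) / scaleX + w / 2.0;
            const double v = (-s * dx + c * dy) / scaleY + h / 2.0;
            const int sx = static_cast<int>(std::floor(u));
            const int sy = static_cast<int>(std::floor(v));
            // Pixels that map outside the sprite are left untouched.
            if (sx >= 0 && sx < w && sy >= 0 && sy < h)
                dst[y * dstWidth + x] = src[sy * w + sx];
        }
    }
}
```

With y pointing down, as in screen coordinates, a positive angle turns the sprite clockwise: rotating a 2x2 image with rows (1, 2) and (3, 4) by 90 degrees yields rows (3, 1) and (4, 2).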

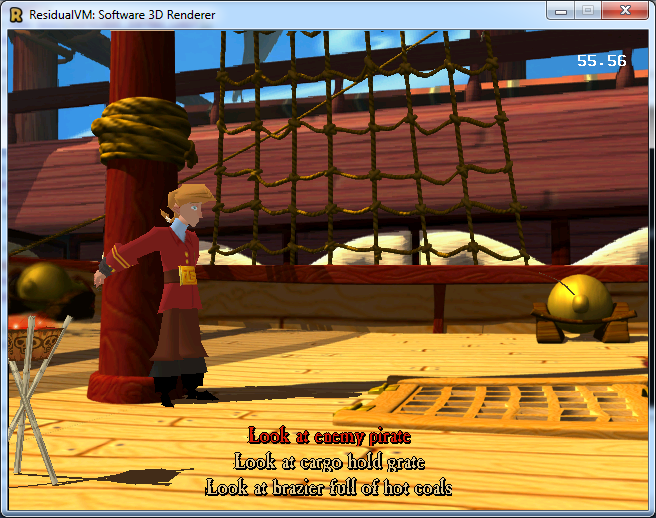

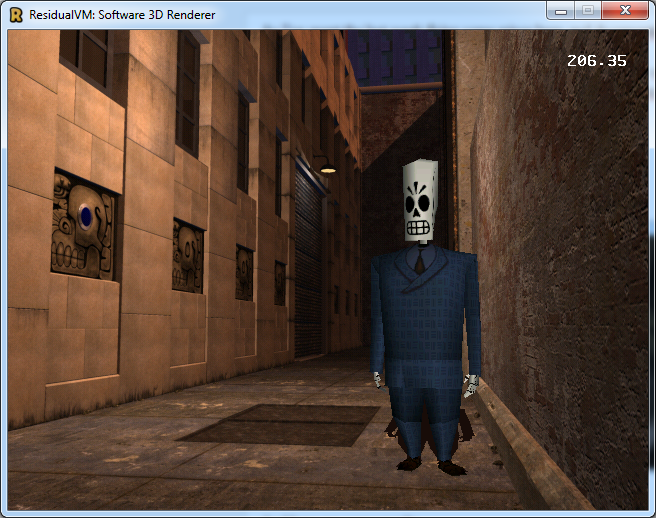

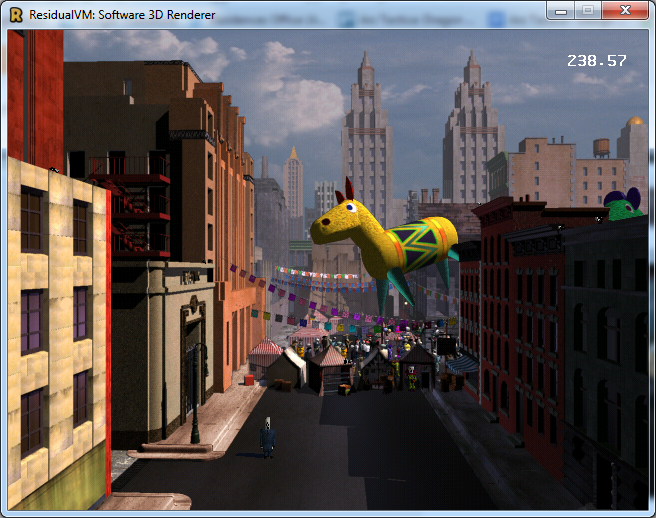

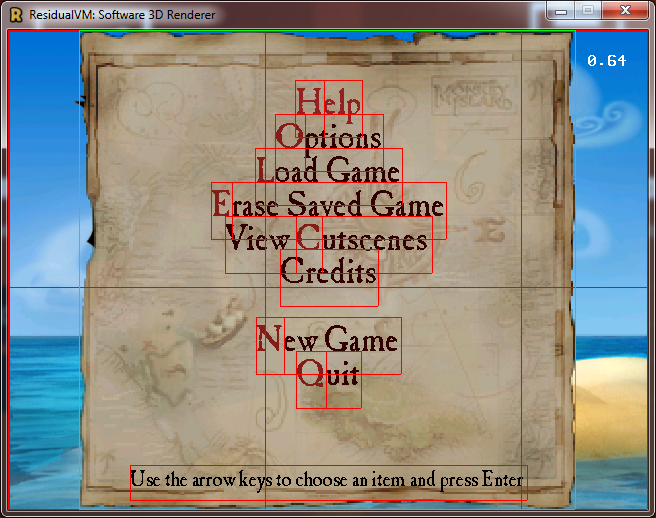

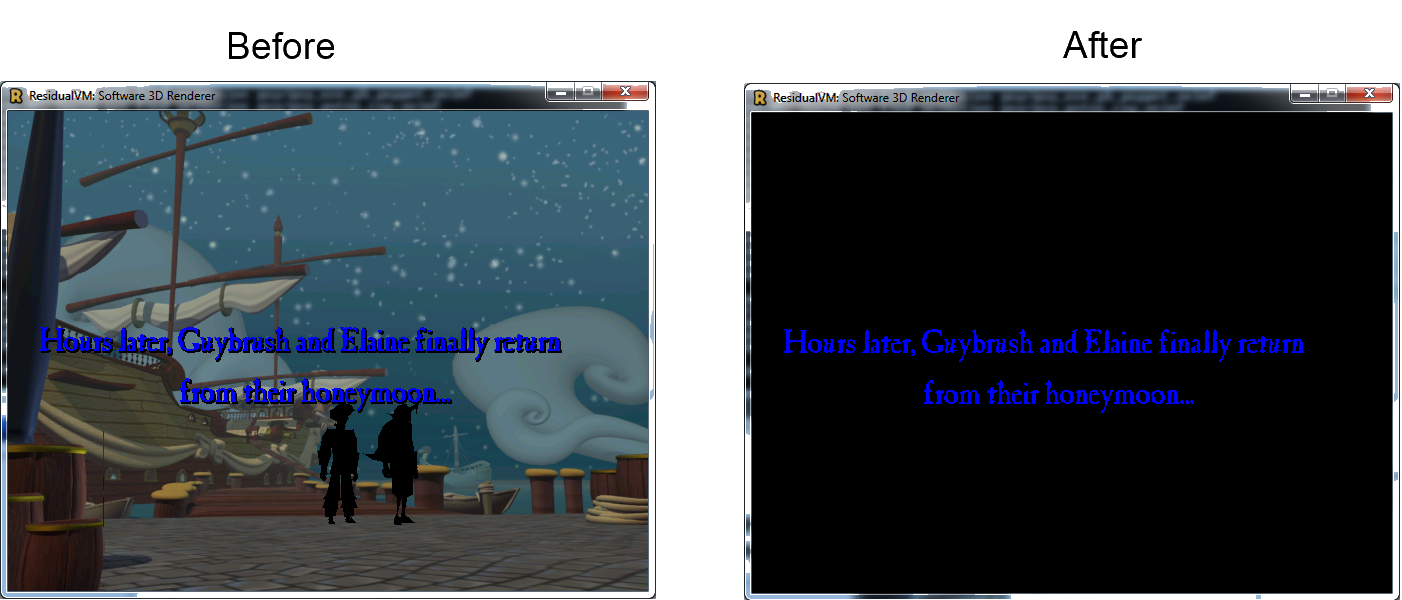

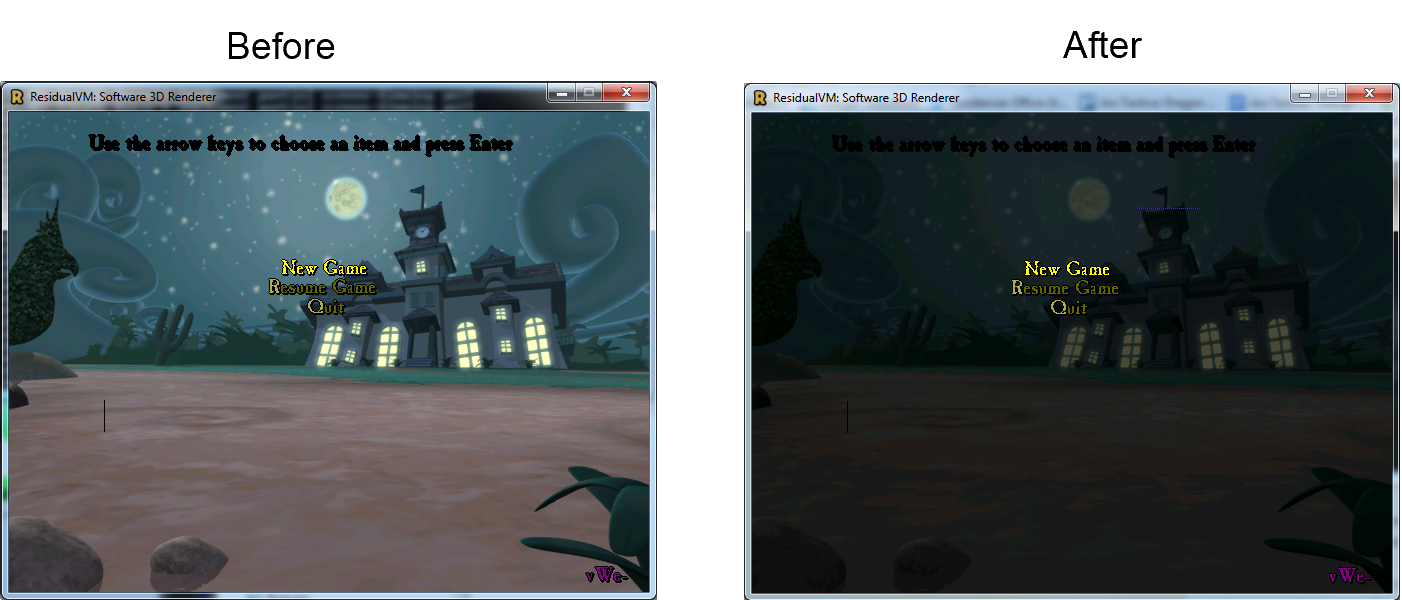

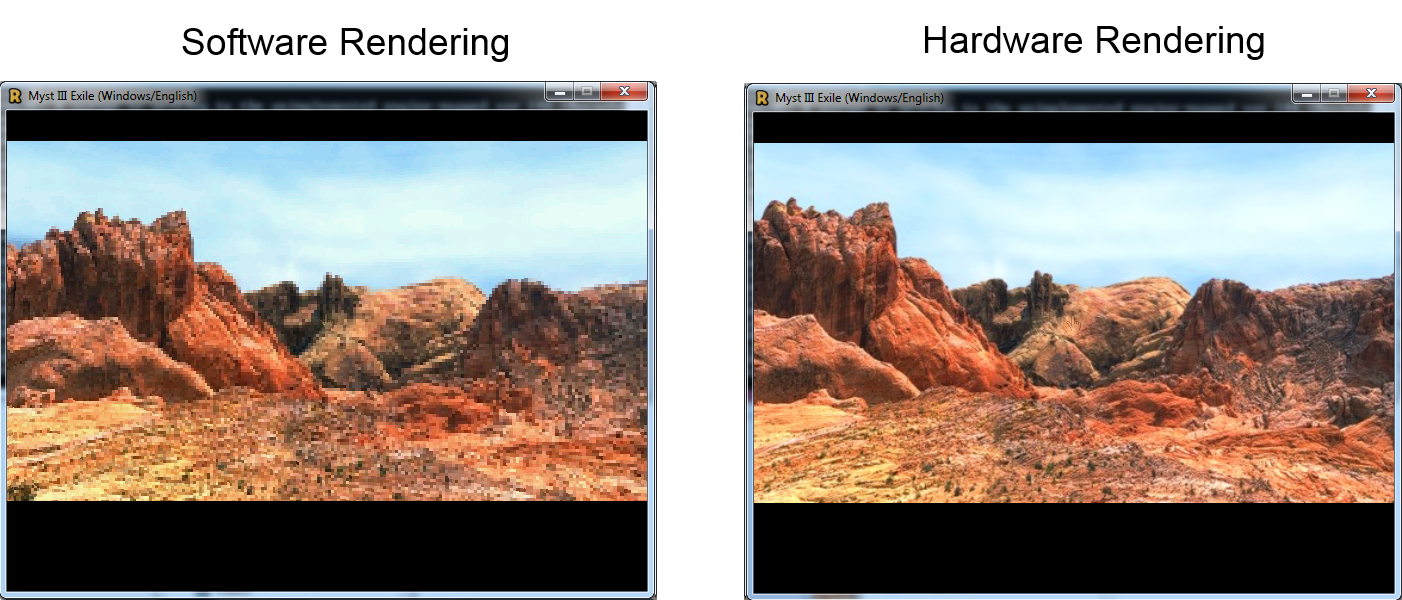

After implementing the API, I also had to replace the existing blitting code in both engines, Grim and Myst3. The end result on screen is the same, but the code is now shared between the engines, so from now on any optimization will benefit both.

This code will also be useful for my next task: the dirty rectangle optimization, which avoids redrawing the screen when its contents haven’t changed. I will talk more about it and its design in another blog post later this week.

Stay tuned!