My task for the next two weeks is going to be an implementation of a standard way of rendering 2D sprites with TinyGL.

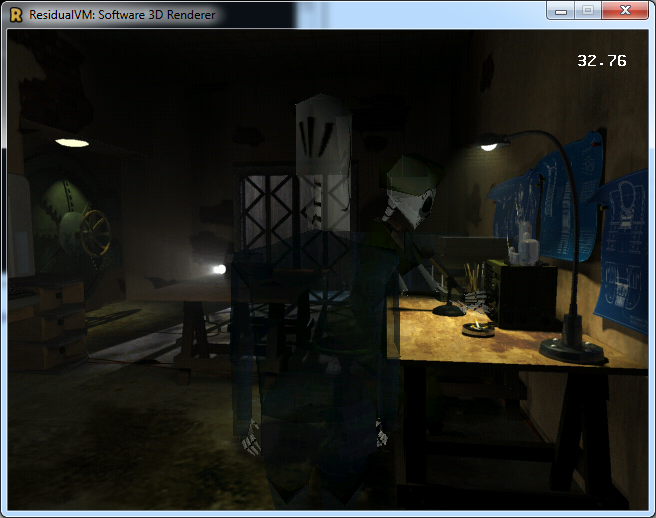

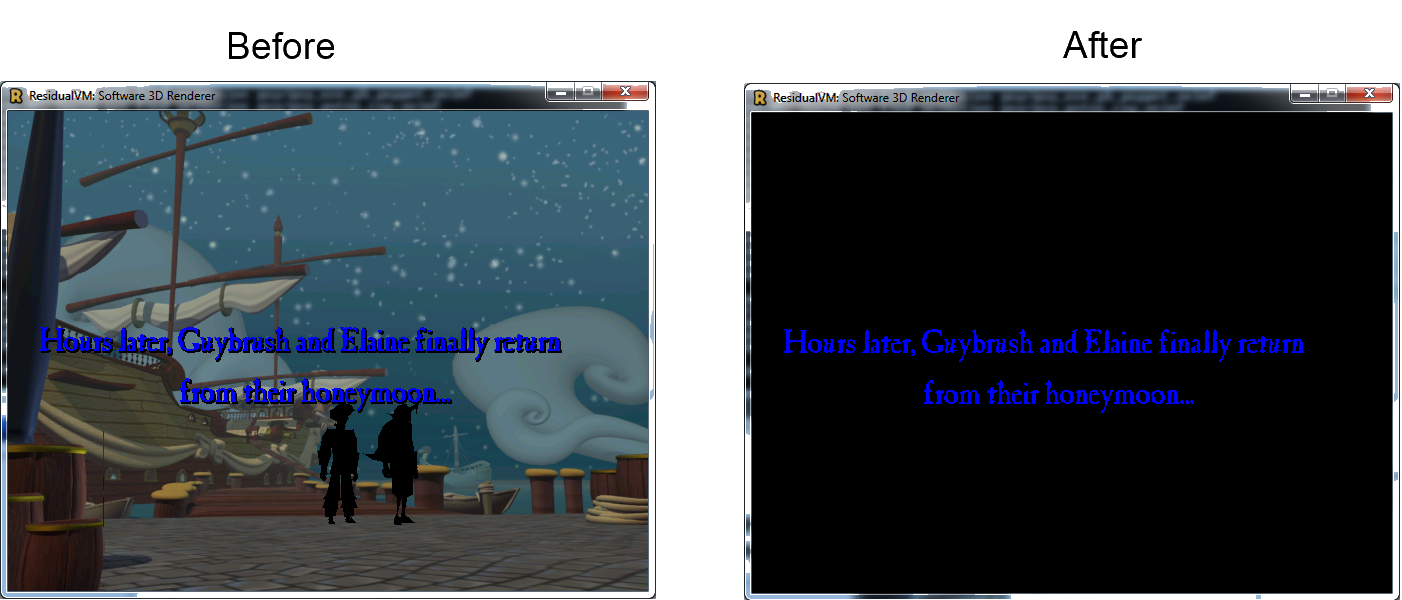

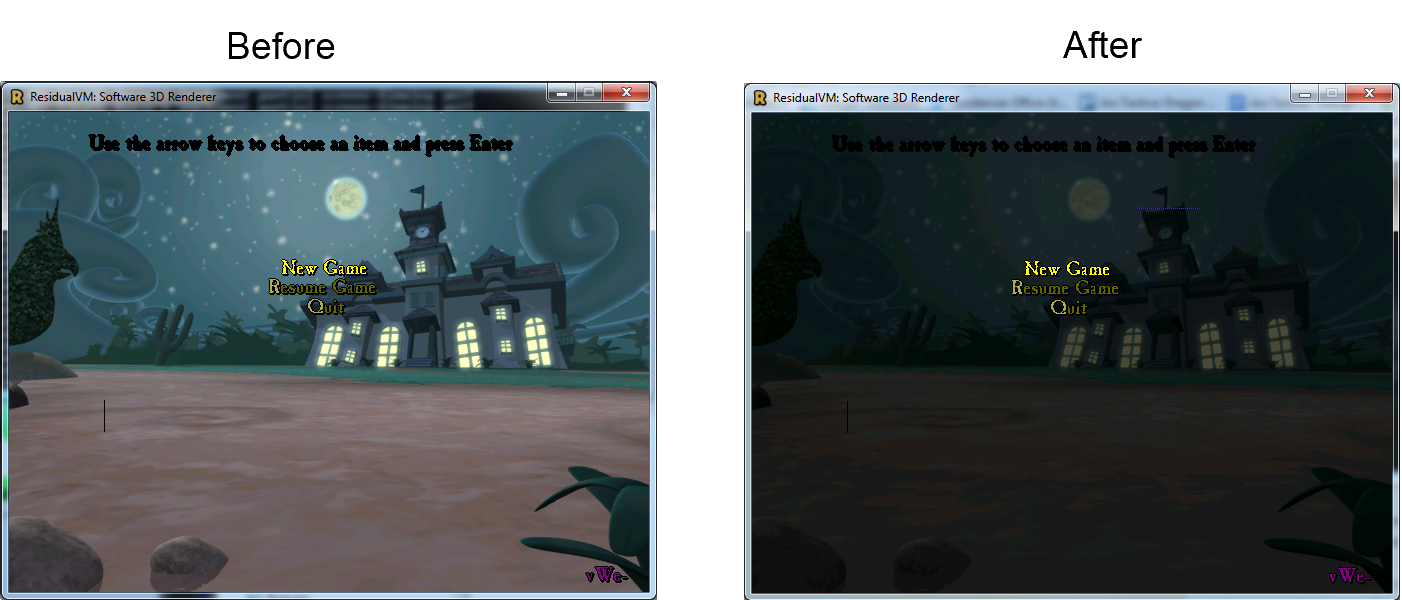

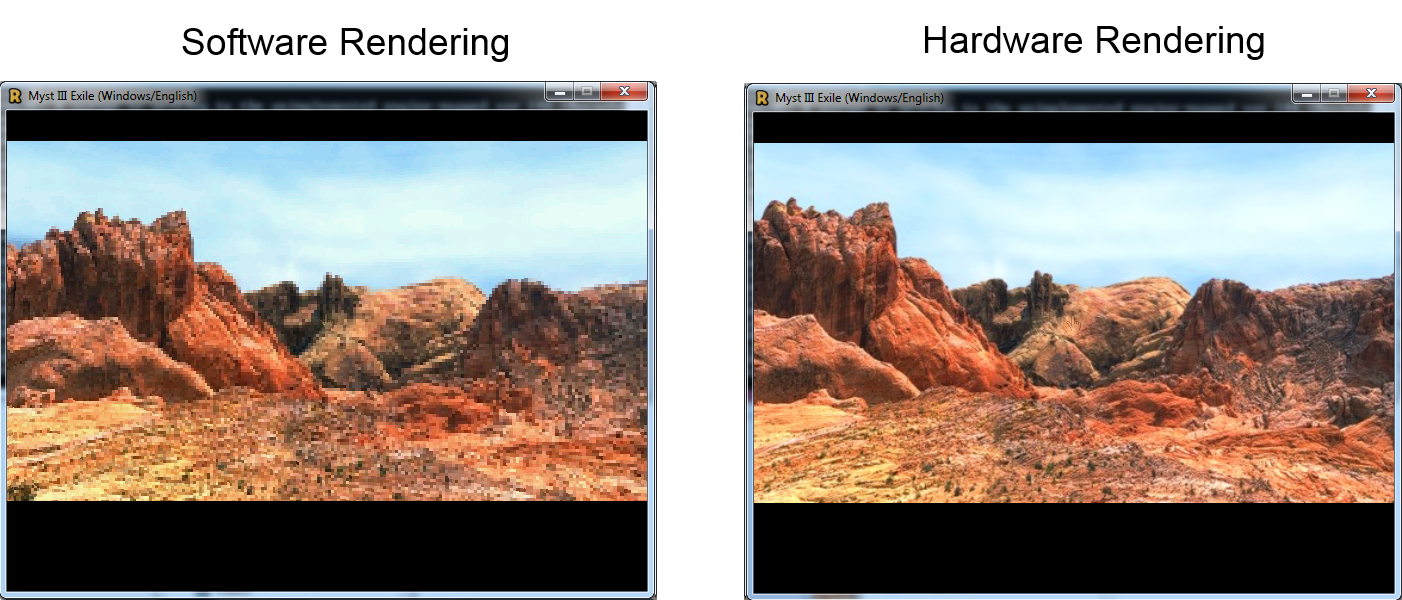

At the moment, whenever a residual game engine needs to render a 2D sprites, different techniques are employed; Grim Fandango’s engine supports RLE sprites and has a faster code path for 2D binary transparency whilst Myst3’s engine only supports basic 2D rendering.

Moreover, features like sprite tinting, rotation and scaling are not easily implemented when using software rendering as they would require an explicit code path (which is, currently, unavailable).

Having said that in the next two weeks I will work on a standard implementation that will allow any engine that uses tinyGL to render 2D sprites with the following features:

- Alpha/Additive/Subtractive Blending

- Fast Binary Transparency

- Sprite Tinting

- Rotation

- Scaling

This implementation will try to follow the same procedure adopted when rendering 2D objects with APIs such as DirectX or OpenGL (since all the current engines use openGL for HW accelerated rendering this seems a rather valid choice to make those two code paths more similar).

As such 2D rendering will require two steps: texture loading and texture rendering.

Texture loading will be needed also to implement features such as RLE faster rendering and to optimize and convert any format to 32 bit – ARGB (allowing to support a broad range of formats externally). Texture rendering will be exposed through a series of functions that allow the renderer to choose the fastest path available for the features requested (ie. if scaling or tinting is not needed then tinyGL will just skip those checks within the rendering code).

That’s it for a general overview of what I will be working on next, stay tuned for updates!